In 1964, a University of Chicago biophysicist named John R. Platt popularized the term “strong inference” in an article of that name published in the journal Science. The idea of strong inference is that scientists conducting experiments should not be trying to prove a single hypothesis. Instead, they should test several competing hypotheses at once. The benefits of such an approach seem apparent. First, it should help scientists avoid confirmation bias. Second, science advances most truly through invalidation, not corroboration. The same may be true of DFS.

This is the 18th installment of The Labyrinthian, a series dedicated to exploring random fields of knowledge in order to give you unusual theoretical, philosophical, strategic, and/or often rambling guidance on daily fantasy sports. Consult the introductory piece to the series for further explanation.

Turning a Negative into a Positive

One of my biology professors in college was a freshwater ecologist whose most influential article was not one that proved a new idea about pond and lake ecosystems, but disproved a faulty hypothesis that had already started to gain traction in academia. That article was published in Ecology, which for an ecologist (I imagine) is pretty exciting . . . if ecologists actually experience excitement. This professor’s findings would not have been possible if not for his use of strong inference and his willingness to run multiple kinds of tests that could produce a result that many other people would’ve considered “negative.”

One of my favorite courses in college was my second-semester organic chemistry lab, in which students were given unknown compounds and tasked with identifying them by the end of the year. To identify a compound, one would first carry out a series of “negative confirmation” tests to prove what the compound wasn’t. With merely a few simple tests, one could eliminate from further consideration perhaps 80 percent of the known compounds in the universe. And then one would continue with this program of negative confirmation until a relatively small pool of candidate compounds remained — and only then would one switch from the question “What is this not?” to “What is this?”

Throughout that exploratory process, which was imbued with the spirit of strong inference, the most important tests were those in which the answer was “No.”

The Return to NNT

In The Black Swan, Nassim Nicholas Taleb contrasts naïve empiricism with negative empiricism. For him, naïve empiricism is the desire to ask questions to which the answers will be positive: “You take past instances that corroborate your theories and you treat them as evidence.” As you might expect, Taleb finds negative empiricism much more productive:

Seeing white swans does not confirm the nonexistence of black swans. There is an exception, however: I know what statement is wrong, but not necessarily what statement is correct. If I see a black swan I can certify that all swans are not white! . . .

We get closer to the truth by negative instances, not by verification! It is misleading to build a general rule from observed facts. Contrary to conventional wisdom, our body of knowledge does not increase from a series of confirmatory observations . . .

The subtlety of real life over the books is that, in your decision making, you need be interested only in one side of the story: if you seek certainty about whether the patient has cancer, not certainty about whether he is healthy, then you might be satisfied with negative inference, since it will supply you the certainty you seek.

For Taleb, “falsification” (proving something to be wrong) is the method by which one creates actionable knowledge and avoids confirmation bias. Refuting is much more important than substantiating. Strong inference is not just his vision of what the true scientific method is. It’s his vision for how one should see the world.

Strong Inference in DFS

In my piece on value investing, I state that the first rule of the discipline is “Don’t lose money.” Value investors avoid losing money by bypassing bad investments, and they bypass bad investments by eliminating as many companies from consideration as possible. Like scientists using strong inference, value investors employ a system of analysis that is just as focused on avoiding bad companies as it is on finding good companies.

My belief is that in DFS far too many people are chasing white swans most of the time. They start their lineup construction process by looking for players to put in their lineups, not players to keep out of them. The affirmative process might be faster and easier, but in the end it probably yields less money.

Starting your slate analysis by looking for players not to use has multiple benefits (especially for cash games), the two most important of which may be these:

- Later in the process, after you’ve removed maybe 90 percent of the uninvestable players from consideration, your odds of selecting a good player for your lineups at random will be drastically improved.

- The semi-skeptical perspective that you develop by looking for reasons to say “No” helps you better differentiate between and prioritize the positive qualities of players when you are finally ready to start saying “Yes.”
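To put rough numbers on the first point, here is a minimal Python sketch using an entirely hypothetical slate: 100 players, 20 of whom are good plays and 80 of whom are uninvestable. The counts and the helper `odds_of_good_pick` are illustrative assumptions, not data from our tools, and the sketch idealizes elimination as removing only uninvestable players.

```python
from fractions import Fraction


def odds_of_good_pick(good: int, uninvestable: int, share_removed: float) -> Fraction:
    """Probability that a uniformly random pick from the remaining pool is a good player.

    Hypothetical helper. Assumes the "No" filter is perfect: it removes only
    uninvestable players and never a good one.
    """
    remaining_bad = uninvestable - round(uninvestable * share_removed)
    return Fraction(good, good + remaining_bad)


# Hypothetical slate: 20 good plays, 80 uninvestable ones.
before = odds_of_good_pick(good=20, uninvestable=80, share_removed=0.0)
after = odds_of_good_pick(good=20, uninvestable=80, share_removed=0.9)
print(f"before elimination: {float(before):.0%}")  # 20%
print(f"after elimination:  {float(after):.0%}")   # 71%
```

In this toy case, a blind pick goes from a 1-in-5 chance of landing a good player to 20 good players out of 28 remaining. Real elimination is never perfect, so actual gains would be smaller.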

Phrased differently: “Yes” from someone who routinely says “No” means so much more than “Yes” from someone whose first inclination is always to say “Yes.”

In DFS, the ultimate goal is to find players to put in your lineup, so at some point you will need to find reasons to like players. The problem with starting a slate analysis by looking for players to like is that this method too easily facilitates confirmation bias. You will be much more likely to select a player you like in general but probably shouldn’t like for that particular slate. And you will be much less likely to distinguish between guys you should like and guys you should like even more. The negative confirmation of strong inference doesn’t just help us eliminate bad players. It also helps us separate the great players from those who are merely good.

Building and Using Negative Plus/Minus DFS Trends

In my piece on Black Swans, I suggest that “avoiding the great players when they don’t do well is just as important as rostering the good players when they play great.” In this statement is the spirit of negative inference, and I believe it’s the key to avoiding bad plays.

I may be biased in saying this, but I believe that the best way one can avoid bad plays is by using our Trends tool. And, specifically, one should use it to search for actionable negative Plus/Minus trends, such as the inverted DFS yield curve.

It’s not hard to create negative trends — but sometimes people struggle with it because they are oriented towards looking for players to use and not players to shun. Here’s a simple example of an actionable negative trend:

As unimpressive as we might think that they are, players who average no more than 25 minutes per game are even worse than we would expect them to be based on their salaries. Historically, they have reached their salary-adjusted expectations not even 43 percent of the time. This is the simplest of trends, but it’s highly effective. And if you wished to make it more specific, you could:

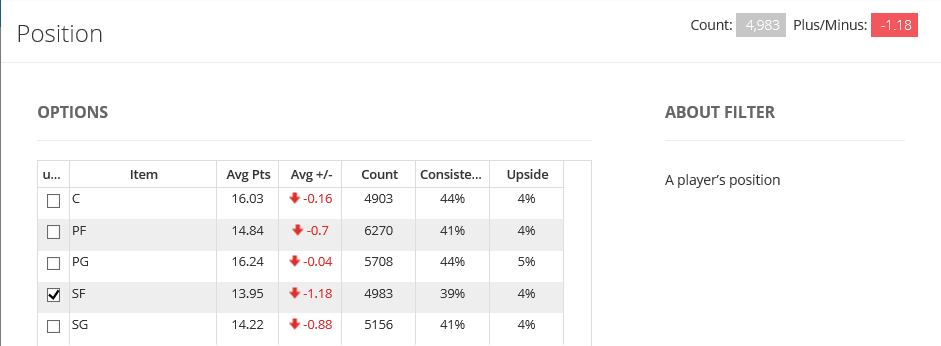

Does it make sense that small forwards are the worst players on limited minutes? Possibly. They generally lack the rebounding potential of power forwards and centers, and they usually lack the shooting skill and ball-handling opportunities of guards. I’m not saying that this narrative is gospel or even that this low-minute small forward trend is one that you should use.

I’m saying that it’s not hard to create negative Plus/Minus trends that make sense and that can help us eliminate a lot of likely uninvestable players from consideration.
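To make the mechanics concrete, here is a minimal Python sketch of a negative trend as a filter over historical game logs. It assumes that a player “reached his salary-adjusted expectations” in a game when his Plus/Minus (actual fantasy points minus salary-implied expected points) was positive. The `GameLog` fields, the `hit_rate` helper, and the sample rows are all hypothetical; a real trend would run on a full historical database, not a handful of toy rows.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class GameLog:
    # Hypothetical fields; not the schema of any real tool.
    player: str
    position: str
    avg_minutes: float  # season average minutes entering the game
    plus_minus: float   # actual fantasy points minus salary-implied expectation


Trend = Callable[[GameLog], bool]


def hit_rate(logs: Iterable[GameLog], trend: Trend) -> tuple[float, int]:
    """Share of matching games with positive Plus/Minus, plus the sample size."""
    matches = [g for g in logs if trend(g)]
    if not matches:
        return float("nan"), 0
    hits = sum(1 for g in matches if g.plus_minus > 0)
    return hits / len(matches), len(matches)


def low_minutes(g: GameLog) -> bool:
    """The simple negative trend from the text: no more than 25 minutes per game."""
    return g.avg_minutes <= 25


def low_minute_sf(g: GameLog) -> bool:
    """A more specific version: the same minutes filter, restricted to small forwards."""
    return low_minutes(g) and g.position == "SF"


# Toy data for illustration only.
logs = [
    GameLog("A", "SF", 22.0, -3.1),
    GameLog("B", "SF", 24.5, 1.2),
    GameLog("C", "PG", 20.0, 2.4),
    GameLog("D", "C", 31.0, 5.0),
    GameLog("E", "PF", 18.0, -0.5),
]

print(hit_rate(logs, low_minutes))    # (0.5, 4)
print(hit_rate(logs, low_minute_sf))  # (0.5, 2)
```

Returning the sample size alongside the hit rate is deliberate: each added condition makes a trend more specific but also shrinks the number of matching games, which makes its hit rate less reliable.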

Incorporating Negative Plus/Minus Trends into the Models

Building positive Plus/Minus trends is fun — but building and using negative Plus/Minus trends might be even more important, especially since you already have access to our Pro Trends, all of which screen for positive Plus/Minus players and can be accounted for in our Models.

What I’m going to recommend might seem crazy, but it also might be worth thinking about.

If you build positive Plus/Minus trends, your “My Trends” column in the Models will likely be little more than a rehashing of the Pro Trends column. A great deal of similarity will likely exist between the trends you create and the ones we have already created. So having a “My Trends” column comprising only positive Plus/Minus trends is probably not very useful.

What about having a “My Trends” column that has a blend of positive and negative Plus/Minus trends? That might seem better, but it’s highly impractical: for each individual player, you would need to dig through trends one by one to see whether he matched positive or negative trends. That’s not reasonable.

The answer is to go contrarian and make ALL of your personal trends screen for negative Plus/Minus players. The Pro Trends will highlight the positive aspects of players, your trends will point out the red flags, and you will have built into your models a method of assessing risk that almost no one else will have.
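Here is a minimal Python sketch of what an all-negative “My Trends” column buys you: each player gets a single red-flag count next to his positive Pro Trends count, so no one has to dig through trends one by one. The `Player` fields, the two red-flag conditions, and the sort order are hypothetical illustrations, not how our Models actually compute their columns.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Player:
    # Hypothetical slate fields for illustration.
    name: str
    position: str
    avg_minutes: float
    pro_trends: int  # count of matching (positive) Pro Trends, taken as given


# Every personal trend is a red flag: it returns True when the player matches a
# negative Plus/Minus condition. Both conditions here are hypothetical examples.
RED_FLAGS: dict[str, Callable[[Player], bool]] = {
    "low_minutes": lambda p: p.avg_minutes <= 25,
    "low_minute_sf": lambda p: p.avg_minutes <= 25 and p.position == "SF",
}


def my_trends(player: Player) -> int:
    """Number of negative trends the player matches (higher means more risk)."""
    return sum(1 for flag in RED_FLAGS.values() if flag(player))


def board(players: list[Player]) -> list[tuple[str, int, int]]:
    """(name, Pro Trends, My Trends), fewest red flags first, then most Pro Trends."""
    rows = [(p.name, p.pro_trends, my_trends(p)) for p in players]
    return sorted(rows, key=lambda r: (r[2], -r[1]))


slate = [
    Player("A", "SF", 23.0, pro_trends=3),
    Player("B", "PG", 34.0, pro_trends=2),
    Player("C", "C", 21.0, pro_trends=1),
]
for name, pro, red in board(slate):
    print(f"{name}: Pro Trends={pro}, My Trends (red flags)={red}")
```

Sorting by red flags first is only one possible design; you could just as easily display both columns side by side and weigh them yourself. The point is that positive and negative signals live in separate columns, so each can be read at a glance.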

This idea might seem counterintuitive — but so does strong inference. That something is counterintuitive doesn’t mean that it’s wrong. Often, that means that it’s something worth trying.

———

The Labyrinthian: 2016, 18

Previous installments of The Labyrinthian can be accessed via my author page. If you have suggestions on material I should know about or even write about in a future Labyrinthian, please contact me via email or on Twitter @MattFtheOracle.