In my last article, I outlined an approach for backtesting lineups to see which metrics predict cash-game success. Today, we'll go over the results of that backtest and talk about what might be driving them.

In a nutshell, the approach is this: for each set of lineups, aggregate the player metrics within each lineup, then use those aggregated metrics as model inputs to predict whether the lineup will hit the cash line. The inputs I'm aggregating are:

— Projected points

— Opening odds

— Closing odds

— Course fit

— Course history

— Floor

— Volume index

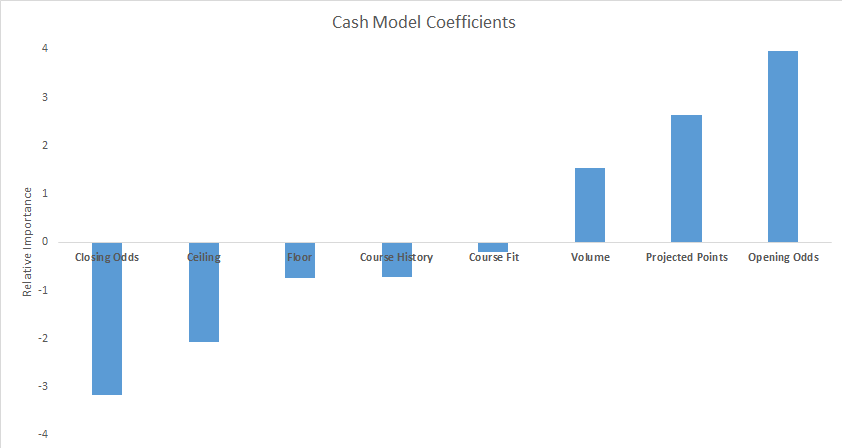
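To make the setup concrete, here is a minimal Python sketch of that pipeline. It assumes each metric is summed across a lineup's players and that the model is a plain logistic regression fit by gradient descent. The article only says the aggregated metrics are used as model inputs, so both choices are assumptions, and the names `METRICS`, `aggregate_lineup`, `cashed`, `fit_logistic`, and `predict_cash_prob` are hypothetical. A real run would standardize the features and use an established library instead of this hand-rolled fit.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Hypothetical field names mirroring the input list above.
METRICS = ["projected_points", "opening_odds", "closing_odds",
           "course_fit", "course_history", "floor", "volume_index"]


def aggregate_lineup(players: Sequence[Dict[str, float]]) -> List[float]:
    """Sum each metric across one lineup's players (assumed aggregation)."""
    return [sum(p[m] for p in players) for m in METRICS]


def cashed(actual_points: float, cash_line: float) -> int:
    """Label: 1 if the lineup's actual score reached the slate's cash line."""
    return int(actual_points >= cash_line)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(X: List[List[float]], y: List[int],
                 lr: float = 0.1, epochs: int = 2000) -> Tuple[List[float], float]:
    """Batch gradient descent on log-loss; returns (coefficients, intercept)."""
    n, k = len(X), len(X[0])
    w, b = [0.0] * k, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * k, 0.0
        for xi, yi in zip(X, y):
            # Prediction error for this lineup: P(cash) minus the 0/1 label.
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(k):
                grad_w[j] += err * xi[j]
            grad_b += err
        # Step against the mean gradient.
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict_cash_prob(w: List[float], b: float, x: List[float]) -> float:
    """Model's estimated probability that a lineup with features x cashes."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


players = [dict.fromkeys(METRICS, 1.0), dict.fromkeys(METRICS, 2.0)]
print(aggregate_lineup(players))  # [3.0, 3.0, 3.0, 3.0, 3.0, 3.0, 3.0]
```

Each row of `X` would be `aggregate_lineup(...)` for one generated lineup, and each entry of `y` would be `cashed(actual_points, cash_line)` for that lineup's slate; the fitted `w` plays the role of the coefficients discussed below.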

Some of these metrics, such as course fit and course history, are already priced into projected points, so there is some overlap. We've already proven that they improve projections, but it's still an open question whether they have a high-frequency, low-impact effect (i.e., a cash-game-friendly effect), a low-frequency, high-impact effect (tournament-friendly), or none at all, meaning they're properly priced into points. And because so many of these variables overlap, we need to test a lot of lineups. I went with 100,000 per slate, for a total of 2.4 million unique lineups across 24 slates. Here are the coefficients:

There’s quite a bit to unpack here, so I’ll go through the most important takeaways.

Answering the Odds Question

The huge coefficients for opening and closing odds jump out, but that's mostly a product of opening and closing odds being very similar to one another: when two inputs move almost in lockstep, the model can pair a large positive weight on one with a large negative weight on the other. Another way to think about the net effect of odds: it's roughly (4 × opening odds) − (3 × closing odds). The biggest thing that tells me is that opening odds matter more than closing odds in predicting cash-game success. This goes a step further than my original findings. Not only does open-to-close line movement not matter, but net positive line movement, according to this equation, actually hurts a lineup's chances of cashing. Writing closing odds as opening odds plus the movement, the net term becomes opening odds − (3 × movement), so every unit of positive movement costs about three units.

As I mentioned in my original line-movement article, I think this is a prompt for further study, not a definitive conclusion. Movement driven by sharp money is most likely a good indicator of success; movement driven by dumb money is not. The trick is being able to tell the difference between the two.
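The odds arithmetic is easy to check in a few lines of Python. The weights 4 and 3 are the rounded values from the net-effect expression in this section; how the odds metric itself is encoded isn't specified, so treat this as an illustration of the algebra rather than a scoring rule. Both function names are hypothetical.

```python
def net_odds_term(opening: float, closing: float,
                  w_open: float = 4.0, w_close: float = 3.0) -> float:
    """Approximate combined odds contribution: w_open*opening - w_close*closing."""
    return w_open * opening - w_close * closing


def net_odds_term_from_movement(opening: float, movement: float,
                                w_open: float = 4.0, w_close: float = 3.0) -> float:
    """Same term with closing = opening + movement substituted in."""
    return (w_open - w_close) * opening - w_close * movement


# No movement: the term reduces to 1 x opening odds.
print(net_odds_term(10.0, 10.0))               # 10.0
# Two units of positive movement cost six units.
print(net_odds_term(10.0, 12.0))               # 4.0
print(net_odds_term_from_movement(10.0, 2.0))  # 4.0
```

The second form makes the point of the section explicit: with opening odds held fixed, the only way movement enters is through the negative closing-odds weight.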

Projections Matter

Projected points has the next-highest coefficient magnitude after odds, which affirms the work we put into Player Models. The heart of making projections is identifying where a player's actual projected outcome differs from his generic salary-implied points, and understanding how to exploit that gap. Talking about the theoretical difference between projected points and salary-implied points is one thing; seeing it validated empirically is another.

The sharper your Player Models/projections/whatever become, the more long-term success you'll have, and now there's data to back that up.
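One way to picture salary-implied points is as a line fit of points against salary across the player pool, with a player's edge being his projection minus what that line predicts at his salary. The sketch below assumes a simple linear least-squares fit; the article doesn't say how salary-implied points are computed, so the linear form and the function names are hypothetical, and the numbers in the usage lines are toy values.

```python
from typing import List, Tuple


def fit_salary_implied(salaries: List[float], points: List[float]) -> Tuple[float, float]:
    """Least-squares line: points ~ slope * salary + intercept (assumed linear form)."""
    n = len(salaries)
    mean_s = sum(salaries) / n
    mean_p = sum(points) / n
    cov = sum((s - mean_s) * (p - mean_p) for s, p in zip(salaries, points))
    var = sum((s - mean_s) ** 2 for s in salaries)
    slope = cov / var
    return slope, mean_p - slope * mean_s


def projection_edge(projection: float, salary: float,
                    slope: float, intercept: float) -> float:
    """Positive edge: the projection beats what salary alone implies."""
    return projection - (slope * salary + intercept)


# Toy pool: salary-implied points rise 5 points per $1,000.
slope, intercept = fit_salary_implied([6000, 8000, 10000], [60.0, 70.0, 80.0])
print(round(projection_edge(75.0, 8000, slope, intercept), 6))  # 5.0
```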

High Ceiling Is Bad

This is a little unexpected, but not too surprising: high ceiling frequently correlates with boom-or-bust players, which are exactly the type to avoid in cash games. And, yes, floor is slightly negative, but I basically consider anything with a coefficient magnitude below 1.0 insignificant. It was a little surprising to see floor matter so little, but that effect may already be captured by the high-ceiling fade.
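The 1.0 rule of thumb amounts to a simple split on coefficient magnitude. A minimal sketch follows; it assumes the coefficients are on comparable scales (for example, fit on standardized inputs), which the article doesn't state, and the function name is hypothetical. The sample values are made up for illustration and are not the backtest's coefficients.

```python
from typing import Dict, List, Tuple

SIGNIFICANCE_CUTOFF = 1.0  # the article's rule of thumb for coefficient magnitude


def split_by_magnitude(coefs: Dict[str, float],
                       cutoff: float = SIGNIFICANCE_CUTOFF) -> Tuple[List[str], List[str]]:
    """Return (meaningful, negligible) metric names under the |coef| >= cutoff rule.

    Meaningful names are ordered by descending magnitude; negligible ones alphabetically.
    """
    meaningful = sorted((name for name, c in coefs.items() if abs(c) >= cutoff),
                        key=lambda name: -abs(coefs[name]))
    negligible = sorted(name for name, c in coefs.items() if abs(c) < cutoff)
    return meaningful, negligible


# Made-up values, not the backtest's coefficients.
print(split_by_magnitude({"metric_a": 2.5, "metric_b": -0.4, "metric_c": -1.2}))
# (['metric_a', 'metric_c'], ['metric_b'])
```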

Volume Is The Hidden Edge

I was surprised to see volume rank as high as it does. Sure, it barely clears the significance threshold, but it supports the idea that low-volume players are just too much of an unknown quantity. Unknown means risk, and unnecessary risk is bad for cash games, so fading low-volume players (often rookies) is important for cash. So many people in PGA do the opposite and jump on a rookie bandwagon, but no one had checked whether that's a good idea until now. Fading that trend looks like an easy way to boost your win rate.

Next week, we'll apply the same approach to look for patterns in high-scoring tournament lineups.