In last week’s article, I described how I tested over two million lineups to see which metrics correlate most strongly with winning cash-game lineups. Today, I’m going to do the same with guaranteed prize pools and see if there are similar metrics that drive GPP success.

First, a bit on the methodology. It’s largely the same as the one I used to analyze cash games, but instead of considering lineups above the cash line, I’m looking at the top one percent of the 100,000 lineups I generated for each slate. This method doesn’t account for ownership and/or contrarianism, which is a major weakness, but it’s still a step toward understanding how to add risk and possibly gain upside in GPPs. Just like the cash-game study, it’s intended to be more of a conversation starter than anything else.
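
To make the labeling step concrete, here is a minimal Python sketch of flagging the top one percent of lineups on a slate. It is not the code behind the study: the function name `label_top_percent`, the simulated scores, and the choice to keep ties at the cutoff are all assumptions made for illustration.

```python
import random


def label_top_percent(scores, top_fraction=0.01):
    """Return 0/1 flags marking the lineups in the top fraction by score.

    Hypothetical helper: ties at the cutoff score are all flagged as 1.
    """
    n_top = max(1, round(len(scores) * top_fraction))
    # Score of the last lineup that still makes the cut.
    cutoff = sorted(scores, reverse=True)[n_top - 1]
    return [1 if s >= cutoff else 0 for s in scores]


# Simulated fantasy-point totals for one slate of 100,000 lineups (made-up data).
random.seed(0)
slate_scores = [random.gauss(400, 40) for _ in range(100_000)]
labels = label_top_percent(slate_scores)
```

The resulting flags would serve as the target that a model tries to fit, in place of the "above the cash line" flag from the cash-game study.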

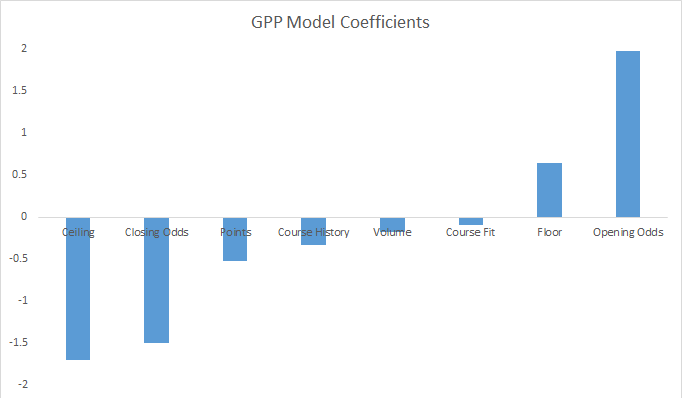

Here are the coefficients for the GPP model:

That . . . is definitely not like the last one. Let’s dive right in and see what’s going on.

GPPs Are Much Noisier

First off, a disclaimer: I reserve the right to throw out the entire study and say that finding the top percentile of lineups is too noisy an endeavor, which it still may be. Any model can try to best-fit the top one percent of lineups, but if it doesn’t beat a dart board, then the coefficients are basically worthless. My crude GPP model does a little better than essentially random guessing, so there’s probably some insight here, but it’s much less cut and dried than in cash games.
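
One way to frame the dart-board comparison is as lift: how often the model's favorite lineups land in the top tier, relative to how often a random pick would. The sketch below is an assumption about how such a check could look, not a description of how the study was evaluated; `lift_over_random` and the toy numbers are hypothetical.

```python
def lift_over_random(model_scores, labels, pick_fraction=0.01):
    """Hit rate of the model's top picks divided by the base rate.

    A value near 1.0 means the model is no better than a dart board.
    """
    n_pick = max(1, round(len(model_scores) * pick_fraction))
    # Indices of lineups ordered from highest to lowest model score.
    ranked = sorted(range(len(model_scores)),
                    key=lambda i: model_scores[i], reverse=True)
    picked = ranked[:n_pick]
    hit_rate = sum(labels[i] for i in picked) / n_pick
    base_rate = sum(labels) / len(labels)
    return hit_rate / base_rate if base_rate else float("nan")


# Toy example: 8 lineups, 2 of which were actual top finishers.
toy_scores = [0.9, 0.8, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6]
toy_labels = [1, 0, 0, 0, 0, 0, 0, 1]
toy_lift = lift_over_random(toy_scores, toy_labels, pick_fraction=0.25)
```

In the toy example the model's two picks contain one real winner, so its hit rate (0.5) is twice the base rate (0.25).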

Opening Odds Are Still Better Than Closing Odds

I’ve covered this subject to death, but I’m now solidly in the camp that open-to-close line movement is a bad metric, and the coefficients here validate that as well.

Floor Matters And Ceiling Doesn’t

This is easily the most surprising finding of either study, since it goes directly against what I would have expected. Ceiling came up as a negative coefficient in the previous study as well, so I’m open to the possibility that ceiling (defined as the percent of tournaments in which the player exceeds 125 percent of expected points) is correlating with some other unknown player property that’s a negative indicator of success. As for upside, it’s now an open question whether it’s a real and repeatable player property. If it is, all signs point to the need for a more advanced metric than ceiling as I have constructed it.
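
For reference, the ceiling definition above can be written out in a few lines of Python. This is only a sketch of the stated definition; the function name `ceiling_rate` and the sample point totals are invented for illustration.

```python
def ceiling_rate(actual_points, expected_points, threshold=1.25):
    """Share of tournaments in which actual points exceeded
    `threshold` times the expected points (1.25 = 125 percent)."""
    if len(actual_points) != len(expected_points):
        raise ValueError("need one expected value per tournament")
    if not actual_points:
        return 0.0
    hits = sum(1 for actual, expected in zip(actual_points, expected_points)
               if actual > threshold * expected)
    return hits / len(actual_points)


# Made-up history for one golfer across four tournaments.
actual = [80, 40, 95, 60]
expected = [60, 60, 70, 60]
golfer_ceiling = ceiling_rate(actual, expected)  # 2 of 4 tournaments qualify
```

Note that a missed cut and a modest finish count the same here (both are simply "not a hit"), which is one reason a more advanced upside metric might behave differently.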

On the flip side, I am sympathetic to floor mattering a lot more in PGA than perhaps other sports. If cut-making in general is a more predictable player property than going off for a huge number, I can see a line of thought that says something like, “Just try to make as many 6/6 lineups as you can and hope that at least one of them has everyone score high.” There’s nothing that says that cut-making and upside have to be equally predictable over the long run, and there’s mounting evidence that the former may be more predictable than the latter.

Projected Points Matter Way Less

This one I’m okay with, since it’s consistent with other sports. If you have a winning GPP lineup, the gap between everyone’s projected and actual points is so large to begin with that you might as well not even bother with projections.

I’ll wrap up this series next week with concluding thoughts and ideas for future areas of study.